vSphere 5.1: vMotion with no Shared Storage

vSphere 5.1 introduced vMotion without shared storage. Frank Denneman has mentioned here that this feature has no official name, though many have given it names such as Enhanced vMotion.

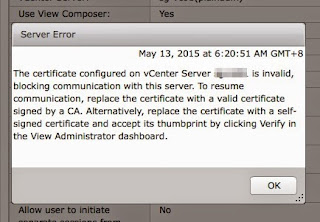

Some have tried to perform this but found that, even after upgrading to vSphere Client 5.1, the option is still greyed out, with a message to power off the VM. This is because in vSphere 5.1 all new features and enhancements are found only in the Web Client, so the C# client does not have this option.

So using the Web Client, I was able to perform this vMotion in my home lab, where I have no shared storage other than the local disks of each ESX server, or across two different clusters that have shared storage within their respective clusters.

Do note that you can perform only 2 concurrent vMotions without shared storage at one time; any additional ones will be queued. These also count toward the total of concurrent vMotions (max of 8) and Storage vMotions (2 per host or 8 per datastore). So, for example, if 2 such vMotions are running, you are left with 6 available vMotions or Storage vMotions. Those in the queue are processed on a first-in, first-out basis.
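
To make the arithmetic concrete, here is a minimal Python sketch of the limits described above. It is only a toy model of the numbers quoted in this post, not how vCenter actually schedules migrations; the function name `admit` and the VM names are made up for illustration.

```python
from collections import deque

# Limits as quoted in this post (not read from any VMware API).
MAX_SHARED_NOTHING = 2  # concurrent vMotions without shared storage
MAX_TOTAL = 8           # overall concurrent vMotion cap


def admit(requests, running_total=0):
    """Split requested migrations into running and queued (FIFO).

    requests      -- VM names in the order they were submitted
    running_total -- other vMotions already running that count toward the cap
    Returns (running, queued, remaining_slots).
    """
    free_total = max(MAX_TOTAL - running_total, 0)
    slots = min(MAX_SHARED_NOTHING, free_total)
    running = list(requests[:slots])
    queued = deque(requests[slots:])  # popleft() yields the oldest request first
    remaining = MAX_TOTAL - running_total - len(running)
    return running, queued, remaining


running, queued, remaining = admit(["vm1", "vm2", "vm3"])
print(running, list(queued), remaining)
```

With three requests and nothing else running, the first two start, the third waits in the queue, and 6 slots remain for other vMotions or Storage vMotions.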

Multiple NICs are supported for vMotion. This helps reduce the migration time, especially when using this new vMotion on a large VM.
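
As a rough back-of-the-envelope illustration of why extra NICs matter, the sketch below estimates the best-case copy time for a VM's disks. It assumes ideal conditions that real migrations will not reach: the links are fully used, throughput scales linearly with the number of NICs, and there is no protocol overhead or re-copying of changed data. The function name and the example figures (500 GB, 1 Gbit/s links) are illustrative, not measurements from my lab.

```python
def ideal_transfer_seconds(size_gb, link_gbps, nics=1):
    """Best-case seconds to copy size_gb gigabytes over `nics` links.

    Assumes decimal units (1 GB = 8 Gbit) and perfect linear scaling
    across NICs, which is an optimistic simplification.
    """
    if nics < 1 or link_gbps <= 0:
        raise ValueError("need at least one NIC and a positive link speed")
    return size_gb * 8 / (link_gbps * nics)


one_nic = ideal_transfer_seconds(500, 1.0, nics=1)
two_nics = ideal_transfer_seconds(500, 1.0, nics=2)
print(f"1 NIC: {one_nic / 60:.0f} min, 2 NICs: {two_nics / 60:.0f} min")
```

Even under these generous assumptions, a large VM takes a long time over a single gigabit link, which is why multi-NIC support is worth having.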

Here I have done a demo of this feature, showing how the VM was vMotioned without any interruption. In fact, I was surprised that I did not see even a single ping drop.

A short note here: in case you are wondering, you can also use this new vMotion without shared storage to perform a "Storage vMotion" of a powered-off VM to relocate its disks to another ESXi host.

Citrix XenServer 6.1 and Microsoft Hyper-V 3 also have Live Migration without shared storage. For Citrix, you would need to use commands, and the command console to monitor progress. For Microsoft, there is still some manual work, like typing the destination host name where a selection list could do the job. Neither supports multi-NIC migration. Thinking about moving a 500GB or even 1TB VM?

Comments

Very handy, thanks for demonstrating 5.1 with no shared storage.

A couple of questions;

1) what are you using for your home lab? I see you are using a mac?

2) Why hide your private IP's?

Thanks very much, incredibly useful.

G.

2. I hide my IP as I do not want anyone to access my home lab in case there is any breach. It's a safety measure.

1) awesome

2) fair point :)

How did you set up your NICs for this? i.e. are you using 2 NICs or more?

Thanks,

G.

I didn't use best practice here since these are whiteboxes. I have used 2 NICs for everything: management, VMkernel, as well as VM network.

2 NICs, for a setup with only two hosts.